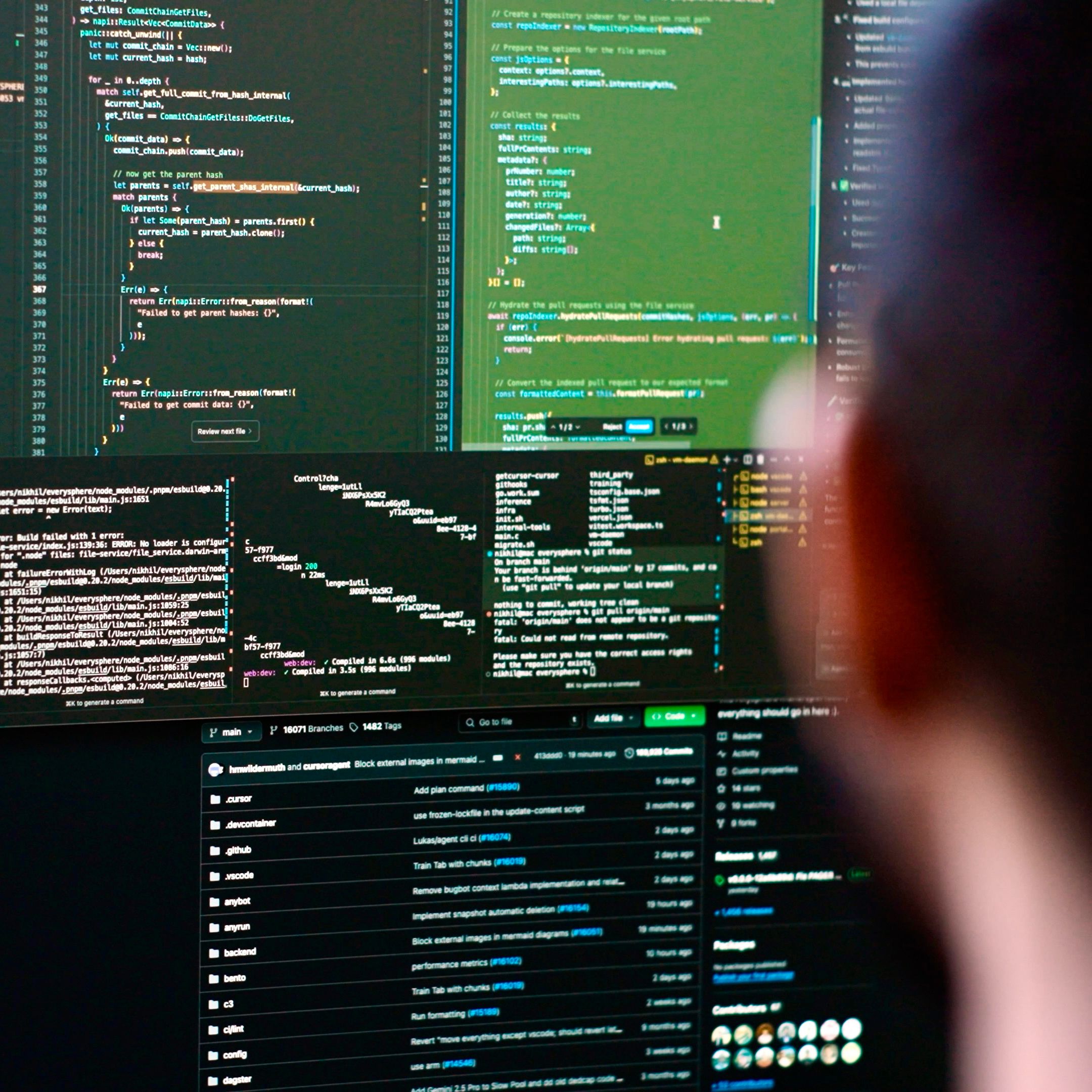

import torch
import torch.nn as nn
from torch.utils.data import DataLoader
from torchvision import datasets, transforms
from tqdm import tqdm

def get_dataloaders(batch_size=64):
    transform = transforms.Compose([transforms.ToTensor()])
    train = datasets.MNIST(root="data", train=True, download=True, transform=transform)
    test = datasets.MNIST(root="data", train=False, download=True, transform=transform)
    return (
        DataLoader(train, batch_size=batch_size, shuffle=True),
        DataLoader(test, batch_size=batch_size),
    )

class MLP(nn.Module):
    def __init__(self, hidden=128):
        super().__init__()
        self.net = nn.Sequential(
            nn.Flatten(),
            nn.Linear(28 * 28, hidden),
            nn.ReLU(),
            nn.Linear(hidden, 10),
        )

    def forward(self, x):
        return self.net(x)

def train_model(epochs=1, lr=1e-3, device=None):
    device = device or ("cuda" if torch.cuda.is_available() else "cpu")
    device_type = torch.device(device).type
    use_amp = device_type == "cuda"

    # Seed for reproducibility, before model init so the initial weights are reproducible too
    torch.manual_seed(42)
    if use_amp:
        torch.cuda.manual_seed_all(42)

    model = MLP().to(device)
    opt = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = nn.CrossEntropyLoss()
    train_loader, _ = get_dataloaders()

    # AMP + scheduler: the scaler and autocast become no-ops when AMP is disabled (CPU)
    scaler = torch.amp.GradScaler("cuda", enabled=use_amp)
    scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(opt, T_max=epochs)

    model.train()
    for epoch in range(epochs):
        total, correct = 0, 0
        for x, y in tqdm(train_loader, desc=f"epoch {epoch+1}"):
            x, y = x.to(device), y.to(device)
            opt.zero_grad(set_to_none=True)

            # Forward pass and loss in mixed precision (CUDA only)
            with torch.autocast(device_type=device_type, enabled=use_amp):
                logits = model(x)
                loss = loss_fn(logits, y)

            # A single backward pass on the scaled loss; unscale before clipping so the
            # norm is measured on the true gradients, then let the scaler step and update
            scaler.scale(loss).backward()
            scaler.unscale_(opt)
            torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=1.0)
            scaler.step(opt)
            scaler.update()

            # Running training accuracy for this epoch
            preds = logits.argmax(dim=1)
            total += y.size(0)
            correct += (preds == y).sum().item()

        scheduler.step()  # cosine annealing is stepped once per epoch
        acc = correct / max(1, total)
        print(f"epoch {epoch+1}: acc={acc:.3f}")

    return model
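The `CosineAnnealingLR(opt, T_max=epochs)` scheduler follows the closed form given in the PyTorch documentation, η_t = η_min + ½(η_max − η_min)(1 + cos(π·t / T_max)), where η_min defaults to 0. The pure-Python sketch below assumes the scheduler is stepped once per epoch, as in `train_model`. It shows the learning rate each epoch actually trains with. The helper `cosine_lr` is a hypothetical name used only for illustration.

```python
import math


def cosine_lr(t, base_lr, t_max, eta_min=0.0):
    """Learning rate after t scheduler steps (hypothetical helper mirroring the closed form)."""
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * t / t_max))


# Learning rate in effect during each epoch of a 4-epoch run with lr=1e-3
epochs = 4
for epoch in range(epochs):
    print(f"epoch {epoch + 1}: lr={cosine_lr(epoch, 1e-3, epochs):.6f}")
```

One consequence: with the default `epochs=1`, the only epoch trains at the full `lr`. The decay to 0 happens after training has already finished, so the scheduler only matters for multi-epoch runs.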
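`torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=1.0)` treats all gradients as one vector. It computes their combined L2 norm and, if that exceeds `max_norm`, rescales every gradient by `max_norm / (total_norm + 1e-6)`. It returns the pre-clipping norm. The sketch below reproduces that rule on plain Python lists as an illustration, not as PyTorch's implementation. `clip_by_global_norm` is a hypothetical name.

```python
import math


def clip_by_global_norm(grads, max_norm, eps=1e-6):
    """Scale gradient vectors in place so their joint L2 norm is at most max_norm.

    Returns the norm measured before clipping, as clip_grad_norm_ does.
    """
    total_norm = math.sqrt(sum(g * g for vec in grads for g in vec))
    # The coefficient is capped at 1, so gradients already under the limit are untouched
    clip_coef = min(1.0, max_norm / (total_norm + eps))
    for vec in grads:
        for i in range(len(vec)):
            vec[i] *= clip_coef
    return total_norm


# Two "parameters" whose gradients have a joint norm of 5 (a 3-4-5 triangle)
grads = [[3.0], [4.0]]
norm = clip_by_global_norm(grads, max_norm=1.0)
print(norm, grads)
```

Because the norm is global, clipping preserves the direction of the full update and only shrinks its length.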
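`GradScaler` multiplies the loss by a scale factor so that small float16 gradients do not underflow. This is also why `scaler.unscale_(opt)` has to run before gradient clipping. The PyTorch AMP documentation describes the dynamic update rule. If any unscaled gradient is inf/NaN, the optimizer step is skipped and the scale is multiplied by `backoff_factor`. After `growth_interval` consecutive clean steps, the scale is multiplied by `growth_factor`. The defaults are `init_scale=2**16`, `backoff_factor=0.5`, `growth_factor=2.0`, `growth_interval=2000`. `ToyLossScaler` below is a hypothetical pure-Python toy that mirrors only that bookkeeping for a flat list of gradients. It is not the `torch.amp` implementation.

```python
import math


class ToyLossScaler:
    """Hypothetical toy mirroring GradScaler's dynamic loss-scaling bookkeeping."""

    def __init__(self, init_scale=2.0**16, growth_factor=2.0,
                 backoff_factor=0.5, growth_interval=2000):
        self.scale = init_scale
        self.growth_factor = growth_factor
        self.backoff_factor = backoff_factor
        self.growth_interval = growth_interval
        self._clean_steps = 0

    def unscale(self, scaled_grads):
        """Divide gradients by the current scale and report whether any is inf/NaN."""
        grads = [g / self.scale for g in scaled_grads]
        found_inf = any(not math.isfinite(g) for g in grads)
        return grads, found_inf

    def update(self, found_inf):
        """Back off on overflow; grow after growth_interval consecutive clean steps."""
        if found_inf:
            self.scale *= self.backoff_factor
            self._clean_steps = 0
        else:
            self._clean_steps += 1
            if self._clean_steps == self.growth_interval:
                self.scale *= self.growth_factor
                self._clean_steps = 0


scaler = ToyLossScaler(growth_interval=2)
true_grad = 1e-3
# Backward on the scaled loss yields scaled gradients; unscaling recovers the true value
grads, found_inf = scaler.unscale([true_grad * scaler.scale])
scaler.update(found_inf)  # clean step 1
scaler.update(False)      # clean step 2: the scale doubles
scaler.update(True)       # overflow: a real scaler would also skip opt.step() here
print(grads, scaler.scale)
```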